Dot Product in ML

Dot Product:

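When you multiply a matrix $A$ of size $n \times d$ with a matrix $B$ of size $d \times n$, the resulting matrix $C$ is of size $n \times n$. Each entry $c_{ij}$ of this matrix is the dot product of the $i$-th row of matrix $A$ with the $j$-th column of matrix $B$.

For matrices:

$$
A = \begin{bmatrix} a_{11} & \cdots & a_{1d} \\ \vdots & \ddots & \vdots \\ a_{n1} & \cdots & a_{nd} \end{bmatrix}
$$

and

$$
B = \begin{bmatrix} b_{11} & \cdots & b_{1n} \\ \vdots & \ddots & \vdots \\ b_{d1} & \cdots & b_{dn} \end{bmatrix}
$$

The resulting matrix is:

$$
C = AB = \begin{bmatrix} c_{11} & \cdots & c_{1n} \\ \vdots & \ddots & \vdots \\ c_{n1} & \cdots & c_{nn} \end{bmatrix}
$$

Where each $c_{ij}$ is:

$$
c_{ij} = \sum_{k=1}^{d} a_{ik} \, b_{kj}
$$

This is the dot product of the $i$-th row of $A$ with the $j$-th column of $B$.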

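The dot product, in this context, measures how "similar" two vectors are. Because $a \cdot b = \|a\| \, \|b\| \cos\theta$, where $\theta$ is the angle between the vectors, the sign of the dot product follows the angle. If two vectors are orthogonal (i.e., perpendicular to each other), their dot product is zero. If they point in broadly the same direction (angle below 90°), their dot product is positive. If they point in broadly opposite directions (angle above 90°), the dot product is negative.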

Thus, the resulting matrix essentially captures the similarity between the rows of matrix $A$ and the columns of matrix $B$. If matrix $B$ is the transpose of matrix $A$ (that is, $B = A^\top$), then the resulting matrix $AA^\top$ captures the pairwise similarity of the rows of $A$ with one another.
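To make the row-times-column picture concrete, here is a minimal NumPy sketch. The matrix values are made up for illustration, and choosing the second matrix to be the transpose of the first is an assumption that mirrors the special case described above.

```python
import numpy as np

# Hypothetical data: n = 3 row vectors, each of dimension d = 2.
A = np.array([[1.0, 0.0],
              [0.0, 2.0],
              [1.0, 1.0]])
B = A.T  # shape (d, n); B = A^T is the special case discussed above

C = A @ B  # shape (n, n)

# Entry C[i, j] is the dot product of row i of A with column j of B.
i, j = 2, 1
entry = np.dot(A[i, :], B[:, j])

print(C)
print(entry == C[i, j])
```

Because the second matrix is the transpose of the first here, the result is symmetric, and the entry for rows 0 and 1 is zero because those two rows are orthogonal.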

Similarity measures:

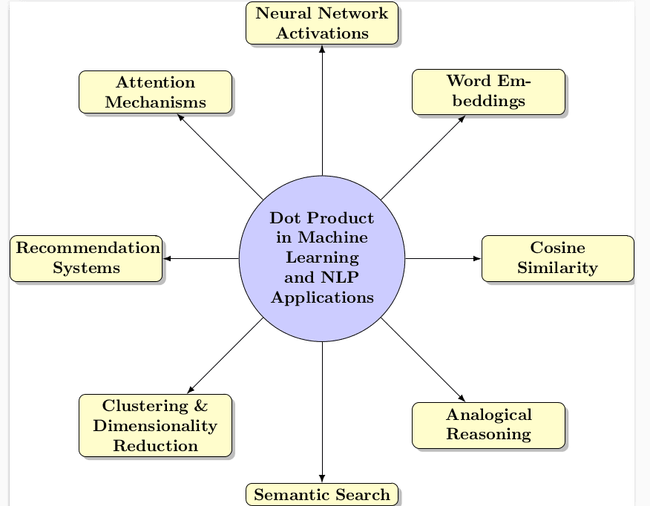

Similarity measures, especially the dot product, play a foundational role in many machine learning and NLP (Natural Language Processing) applications.

Cosine Similarity in Information Retrieval & Document Similarity:

- Each document can be represented as a vector where each dimension corresponds to a term (word) from a vocabulary, and the value can be the term frequency or TF-IDF (term frequency-inverse document frequency) score.

- The cosine similarity between two document vectors gives a measure of how similar the two documents are content-wise. It's called cosine similarity because it's the cosine of the angle between two vectors. If the vectors are orthogonal (angle = 90°), the cosine similarity is 0; if they point in the same direction (angle = 0°), it's 1.
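As a rough illustration of the two points above, the sketch below computes cosine similarity on tiny term-count vectors. The vocabulary, the counts, and the helper name `cosine_similarity` are all invented for this example.

```python
import numpy as np

def cosine_similarity(u, v):
    """Cosine of the angle between u and v (assumes neither is all zeros)."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

# Hypothetical vocabulary and term counts, for illustration only.
vocabulary = ["cat", "dog", "stock", "market"]
doc_pets = np.array([2.0, 1.0, 0.0, 0.0])
doc_pets_long = np.array([4.0, 2.0, 0.0, 0.0])  # same proportions, twice the length
doc_finance = np.array([0.0, 0.0, 3.0, 1.0])

same_topic = cosine_similarity(doc_pets, doc_pets_long)
different_topic = cosine_similarity(doc_pets, doc_finance)
print(same_topic, different_topic)
```

The two pet documents score close to 1 even though one is twice as long, while the pet and finance documents share no terms and score 0. This insensitivity to document length is one reason cosine similarity is often preferred over the raw dot product for text.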

Word Embeddings:

- In modern NLP, words are often represented as dense vectors (word embeddings) in a continuous space, e.g., Word2Vec, GloVe, and embeddings from models like BERT.

- The similarity between two word embeddings can be measured using the dot product or cosine similarity, and it reflects how semantically close the words are. For example, "king" and "queen" would be closer in this space than "king" and "apple".

Neural Network Activations:

- In neural networks, especially deep learning models, the dot product is used extensively in fully connected layers, convolutional layers, etc.

- The weights in a neural network can be thought of as learning to recognize certain patterns or features. The dot product between an input vector and a weight vector can measure how much of the feature represented by the weights is present in the input.
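A minimal sketch of a fully connected layer shows where the dot products appear. The weights, bias, and input below are hand-picked hypothetical values, not learned ones, and the ReLU activation is an assumption added for illustration.

```python
import numpy as np

# Hypothetical layer with 3 inputs and 2 units; each row of W is one unit's weight vector.
W = np.array([[1.0, 0.0, -1.0],
              [0.5, 0.5, 0.5]])
b = np.zeros(2)
x = np.array([2.0, 1.0, 0.0])

# One dot product per unit: z[k] = dot(W[k], x) + b[k].
z = W @ x + b

# ReLU keeps only positive responses (assumed activation for this sketch).
activation = np.maximum(z, 0.0)
print(z, activation)
```

Each entry of `z` can be read as how strongly the input matches the pattern encoded in the corresponding weight row.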

Attention Mechanisms:

- In transformer-based models like BERT, GPT, etc., attention mechanisms are used to weigh the importance of different parts of an input sequence when producing an output.

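- Dot products are used in these attention calculations to measure relevance: the dot product between a query vector and each key vector scores how relevant that part of the input is to the current computation, and the scores are then normalized (typically with a softmax) into attention weights.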
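The attention computation can be sketched in a few lines of NumPy. This assumes the scaled dot-product form commonly used in transformers; the scaling by the square root of the key dimension, the function names, and the random inputs are assumptions for the example rather than details given above.

```python
import numpy as np

def softmax(scores, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Return the attended values and the attention weights."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # one dot product per (query, key) pair
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

# Random stand-ins for learned projections: 2 queries, 3 keys/values.
rng = np.random.default_rng(0)
Q = rng.normal(size=(2, 4))
K = rng.normal(size=(3, 4))
V = rng.normal(size=(3, 5))

output, weights = scaled_dot_product_attention(Q, K, V)
print(weights.sum(axis=1))
```

Each output row is itself a weighted sum of the value vectors, so attention uses the dot product both to score relevance and to combine values.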

Recommendation Systems:

- User and item interactions can be represented in a matrix. Matrix factorization techniques, like Singular Value Decomposition (SVD), can decompose this matrix into user and item embeddings.

- The dot product between a user and an item embedding can predict the user's preference for that item.
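The following sketch shows the idea with a truncated SVD on a small, fully observed ratings matrix. The ratings are made up, the number of latent factors `k` is an arbitrary choice, and splitting the singular values evenly between the user and item embeddings is one convention among several. Real recommendation data usually has missing entries, which plain SVD does not handle.

```python
import numpy as np

# Hypothetical ratings matrix: 4 users x 3 items (made-up values).
R = np.array([[5.0, 4.0, 1.0],
              [4.0, 5.0, 1.0],
              [1.0, 1.0, 5.0],
              [2.0, 1.0, 4.0]])

k = 2  # number of latent factors (arbitrary for this sketch)
U, s, Vt = np.linalg.svd(R, full_matrices=False)

# Split the singular values between the two sides to get embeddings.
user_emb = U[:, :k] * np.sqrt(s[:k])     # shape (4, k)
item_emb = Vt[:k, :].T * np.sqrt(s[:k])  # shape (3, k)

# Predicted preference of user u for item i is a single dot product.
u, i = 0, 2
pred = np.dot(user_emb[u], item_emb[i])

# All predictions at once: the rank-k approximation of R.
R_hat = user_emb @ item_emb.T
print(pred, R[u, i])
```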

Clustering & Dimensionality Reduction:

- Clustering algorithms like K-means group data points that are close to one another. Standard K-means uses Euclidean distance, while variants such as spherical K-means use cosine similarity instead.

- Techniques like t-SNE use pairwise similarities to reduce the dimensionality of data while preserving local structures.

Semantic Search:

- Given a query, instead of just searching for documents with exact matching words, you can search for documents whose embeddings are semantically close to the query embedding.
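A bare-bones version of this ranking step might look like the sketch below. The three-dimensional "embeddings" are invented numbers (a real system would obtain them from an embedding model), and `top_k` is a hypothetical helper name.

```python
import numpy as np

def top_k(query_vec, doc_matrix, k=2):
    """Return indices and scores of the k rows of doc_matrix most similar to query_vec."""
    # Normalize so that each dot product equals a cosine similarity.
    q = query_vec / np.linalg.norm(query_vec)
    D = doc_matrix / np.linalg.norm(doc_matrix, axis=1, keepdims=True)
    scores = D @ q                   # one dot product per document
    order = np.argsort(-scores)[:k]  # highest score first
    return order, scores[order]

# Made-up embeddings, one row per document.
doc_embeddings = np.array([[0.9, 0.1, 0.0],
                           [0.0, 1.0, 0.2],
                           [0.8, 0.3, 0.1]])
query_embedding = np.array([1.0, 0.2, 0.0])

indices, scores = top_k(query_embedding, doc_embeddings, k=2)
print(indices, scores)
```

Scoring every document is a single matrix-vector product, which is why this approach works well for moderately sized collections. Larger collections typically rely on approximate nearest-neighbor indexes instead.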

Analogical Reasoning:

- With word embeddings, analogies like "man" is to "woman" as "king" is to "queen" can be solved by vector arithmetic: compute king - man + woman, then pick the word whose vector has the highest cosine similarity to the result.
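The vector arithmetic can be sketched with hand-built toy embeddings. The four dimensions (roughly: royalty, male, female, fruit) and all values are invented so that the analogy works exactly; trained embeddings such as Word2Vec are far noisier. The function name `analogy` is hypothetical, and excluding the three input words from the candidates is a common convention assumed here.

```python
import numpy as np

# Toy embeddings with hand-picked, interpretable dimensions (illustration only).
embeddings = {
    "king":  np.array([1.0, 1.0, 0.0, 0.0]),
    "queen": np.array([1.0, 0.0, 1.0, 0.0]),
    "man":   np.array([0.0, 1.0, 0.0, 0.0]),
    "woman": np.array([0.0, 0.0, 1.0, 0.0]),
    "apple": np.array([0.0, 0.0, 0.0, 1.0]),
}

def cosine(u, v):
    """Cosine similarity (assumes non-zero vectors)."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

def analogy(a, b, c, vocab):
    """Solve 'a is to b as c is to ?' by nearest cosine neighbor."""
    target = vocab[b] - vocab[a] + vocab[c]
    candidates = {
        word: cosine(target, vec)
        for word, vec in vocab.items()
        if word not in (a, b, c)  # skip the query words themselves
    }
    return max(candidates, key=candidates.get)

answer = analogy("man", "woman", "king", embeddings)
print(answer)
```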

The dot product can be thought of in two primary ways:

Similarity:

- When both vectors being dotted are normalized (i.e., have a magnitude of 1), the dot product gives the cosine of the angle between them. This is known as the cosine similarity.

- If the dot product is 1, the vectors are identical (angle of 0°).

- If the dot product is 0, the vectors are orthogonal or perpendicular (angle of 90°), meaning they share no similarity.

- If the dot product is -1, they are diametrically opposed (angle of 180°).

Weighted Sum:

- The dot product can be seen as a weighted sum when one vector represents weights and the other represents values. In this interpretation, the dot product gives a single value that is the sum of products of corresponding entries of the two sequences of numbers.

- This interpretation is prevalent in neural networks where input values are weighted by learned weights.
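One way to see the difference between the two readings is to scale one of the vectors. In the sketch below (hypothetical weights and values, with `unit` as an illustrative helper), the weighted sum grows with the scale, while the normalized dot product stays the same.

```python
import numpy as np

weights = np.array([0.5, 0.3, 0.2])   # hypothetical weights
values = np.array([10.0, 20.0, 30.0])  # hypothetical values

def unit(v):
    """Scale v to length 1 (assumes v is non-zero)."""
    return v / np.linalg.norm(v)

# Weighted-sum reading: 0.5*10 + 0.3*20 + 0.2*30.
weighted_sum = np.dot(weights, values)
weighted_sum_scaled = np.dot(weights, 3 * values)

# Similarity reading: normalize first, so only direction matters.
cosine = np.dot(unit(weights), unit(values))
cosine_scaled = np.dot(unit(weights), unit(3 * values))

print(weighted_sum, weighted_sum_scaled)
print(cosine, cosine_scaled)
```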

Both interpretations are valid, and the context in which you're working determines which interpretation is more appropriate. For example, in machine learning and specifically in neural networks, the weighted sum interpretation is often more relevant, whereas in vector space models in NLP or information retrieval, the similarity interpretation is more common.
